Article

Dec 30, 2025

Why Customer Feedback Management Breaks at Scale

Product managers spend more time organizing customer feedback than analyzing it. Learn why manual synthesis breaks down at scale and what automated unstructured feedback synthesis could look like.

It's Friday afternoon. You've got a roadmap planning meeting on Monday and you need to come prepared with data on what customers have been asking for this past quarter.

So you request a summary from your head of support. Then you scroll through three weeks of Slack messages where sales reps tagged you with "customer feedback." You pull up transcripts from sales calls and feed them into your own custom GPT to summarize each call and extract its key topics. You check that shared Google Doc where CSMs occasionally paste quotes from their QBRs.

At the end of the day you have a spreadsheet with 73 rows of feedback. You've tagged them by theme. You've tried to count how many times "performance issues" came up, but you're not sure if "dashboard is slow," "reports time out," and "can't export large datasets" are the same problem or three different ones.

And you know that by Monday, there will be 15 more pieces of feedback scattered across tools you haven't even checked yet.

This is what customer feedback looks like at scale. And it's broken.

The Problem Isn't Volume

When you had 50 customers and two support tickets a week, you could remember everything. You knew exactly who asked for what and could spot patterns because they were obvious.

But somewhere between 50 and 500 customers, that stopped working, simply because manual categorization of unstructured feedback doesn't scale. If you still went through everything, you would spend more time organizing feedback than analyzing it. So a lot of customer contact just gets ignored while you refine your roadmap.

You have all the data you need. It's just not in one place, and it's not easy to work with.

The Lack of Insights Is Disrupting Your Roadmap

Your CEO forwards you one customer email. Suddenly that feature feels urgent, even though 40 customers quietly mentioned something else across the last three months. But you don't have an easy way to prove that, so the loud voice wins.

You can't confidently answer "how many customers asked for this?" You know someone asked. You think others did too. But you can't prove it without spending hours digging. So you hedge. You say "several customers" when what you mean is "I'm not really sure, but I think it's important."

Every prioritization meeting turns into "who remembers the most recent example." Your roadmap discussions are based on whoever has the best memory or the most Slack screenshots, not actual patterns in the data. This means your roadmap is being decided by recency bias, not data.

The result is that you end up building the wrong things.

Synthesizing Unstructured Feedback at Scale Becomes Impossible

Here are the three core problems that show up at scale:

Problem 1: Feedback is scattered everywhere.

Support tickets in Zendesk. Sales notes in HubSpot. Feedback in Slack. That one really important conversation buried in your inbox. There's no single place to see it all.

Problem 2: The same problem shows up in different words.

"Dashboard is slow." "Reports time out." "Can't export large datasets." "Performance issues with big data." These are all describing the same underlying issue, but they're in four different places, phrased four different ways. Unless someone connects the dots, each one gets dismissed as a one-off.

This is the challenge of unstructured feedback. Unlike structured survey responses or feature voting, unstructured feedback comes in natural language. It's messy and inconsistent, and it takes interpretation: someone has to recognize that different words are describing the same need.
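To make the "same problem, different words" idea concrete, here is a minimal Python sketch that maps the four quotes above onto one theme. The keyword list, the theme name, and the function names are all assumptions made up for illustration. Plain substring matching is only a stand-in here; real feedback would almost certainly need something more robust.

```python
from collections import defaultdict

# Hypothetical keyword rules, invented for this example.
# Substring matching is a deliberately naive stand-in for real synthesis.
THEME_KEYWORDS = {
    "performance": ["slow", "time out", "export large", "performance"],
}


def assign_theme(text: str) -> str:
    """Return the first theme whose keywords appear in the text."""
    lowered = text.lower()
    for theme, keywords in THEME_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return theme
    return "uncategorized"


def group_by_theme(items: list[str]) -> dict[str, list[str]]:
    """Group raw feedback strings under their assigned theme."""
    groups: dict[str, list[str]] = defaultdict(list)
    for item in items:
        groups[assign_theme(item)].append(item)
    return dict(groups)


feedback = [
    "Dashboard is slow.",
    "Reports time out.",
    "Can't export large datasets.",
    "Performance issues with big data.",
    "Please add dark mode.",
]
grouped = group_by_theme(feedback)
```

With this sample input, the four performance-related quotes land in one group and the dark mode request stays uncategorized. The hard part in practice is that nobody hands you the keyword list up front.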

Problem 3: You can't easily quantify anything.

How many customers mentioned this? When did it start trending up? Is this an enterprise problem, or does it affect everyone? You know the answers are in your tools somewhere, but getting them requires manual archaeology.

Why Unstructured Feedback Is Both Essential and Impossible

Here's the paradox: unstructured feedback is often the most valuable feedback you can get. It is rich and nuanced, and it tells you not just what customers want, but why they want it and how they experience the problem. It often surfaces at the exact moment a customer runs into a problem or realizes that a feature would make their life easier.

But it is impossible to work with at scale. Structured feedback tools like feature voting boards or NPS surveys give you clean, quantifiable data, yet they miss all the context, all the nuance, and all the unexpected insights that come from unstructured conversations. They force customers to pick from your predetermined options instead of telling you what they actually think, and response rates are often low.

What This Could Look Like

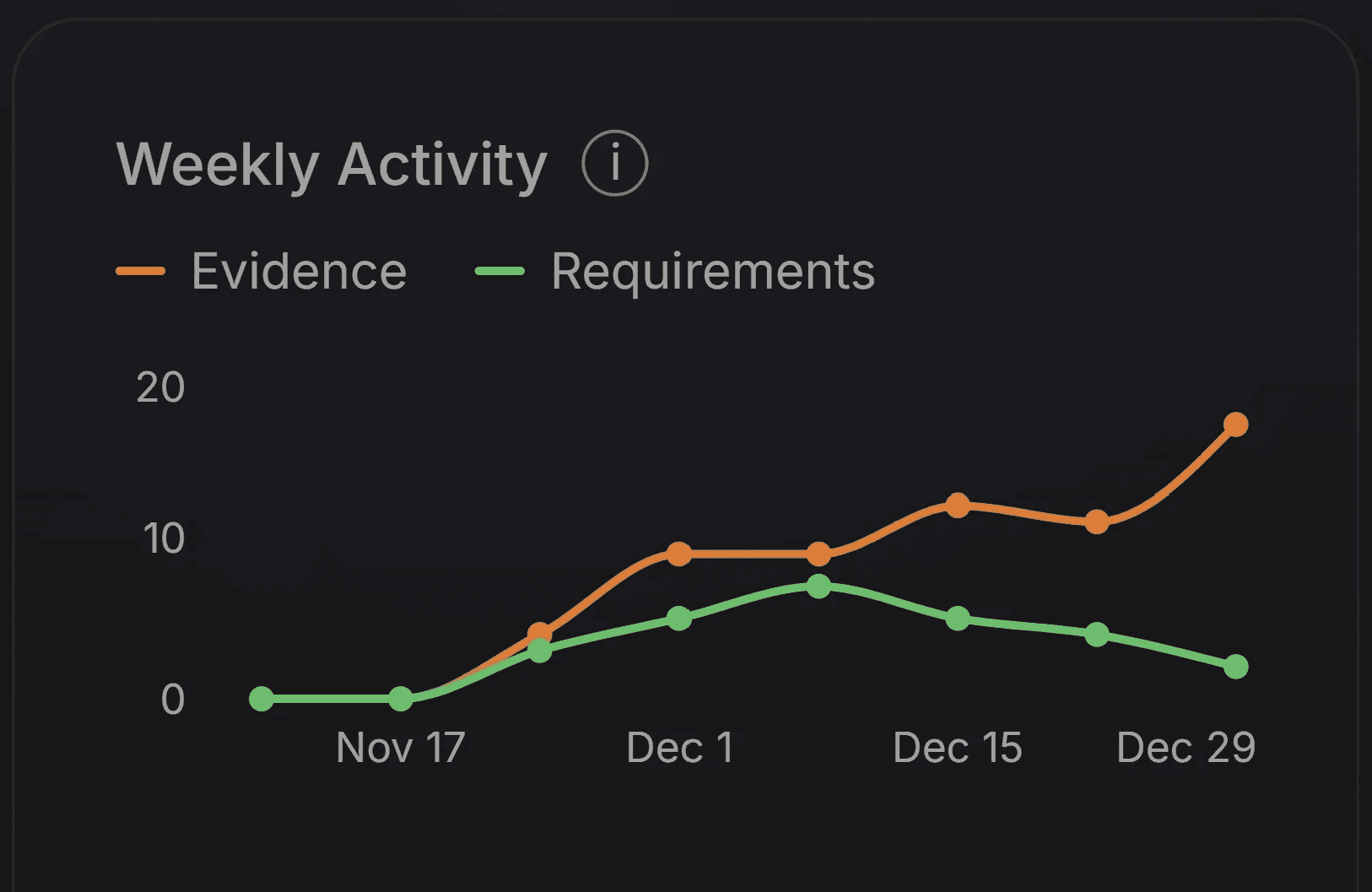

The solution isn't to stop collecting unstructured feedback. It's to have the synthesis happen automatically and at scale, so you can focus on the how instead of the what. You need to be able to see that 47 customers mentioned this in the last quarter, that it's trending up, and how much revenue is tied to those customers.

We are not there yet, but we are getting there, and we are running beta tests with a small group of clients now. Want to join us? Sign up for the waitlist, or drop me a message directly at Marty@productpulse.ai if you want to join the beta program.
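Once feedback is tagged by theme, those three numbers are simple to compute. The Python sketch below assumes each piece of feedback is already stored as a record with a customer, a theme, a date, and that customer's annual recurring revenue. The `Mention` record, its field names, and the sample data are all hypothetical, and this is not a description of how any particular product works.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Mention:
    customer: str
    theme: str
    day: date
    arr: int  # hypothetical field: the customer's annual recurring revenue


def summarize(mentions, theme, start, end):
    """Summarize one theme within an inclusive date range."""
    in_range = [
        m for m in mentions if m.theme == theme and start <= m.day <= end
    ]
    # Count each customer once, no matter how often they raised the theme.
    arr_by_customer = {m.customer: m.arr for m in in_range}
    # Mentions per (year, month) give a rough view of the trend.
    monthly = Counter((m.day.year, m.day.month) for m in in_range)
    return {
        "customers": len(arr_by_customer),
        "revenue": sum(arr_by_customer.values()),
        "mentions_per_month": dict(sorted(monthly.items())),
    }


# Made-up sample data for illustration only.
mentions = [
    Mention("acme", "performance", date(2025, 10, 3), 50_000),
    Mention("acme", "performance", date(2025, 11, 12), 50_000),
    Mention("globex", "performance", date(2025, 11, 20), 20_000),
    Mention("initech", "performance", date(2025, 12, 1), 30_000),
    Mention("initech", "performance", date(2025, 12, 9), 30_000),
    Mention("hooli", "performance", date(2025, 12, 15), 10_000),
    Mention("globex", "dark mode", date(2025, 12, 2), 20_000),
]
summary = summarize(
    mentions, "performance", date(2025, 10, 1), date(2025, 12, 31)
)
```

For this sample, the summary reports 4 distinct customers, 110,000 in tied revenue, and monthly mention counts of 1, 2, and 3 from October to December. The arithmetic is the easy part. Getting scattered, inconsistently worded feedback into records like these is where the manual work goes today.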